Building a vertical AI agent

Apr 29, 2025

Building vertical AI agents varies by domain, but in general they all have similar toolkits at their disposal. In this post we will discuss how we build AI agents at Briefcase. Briefcase automates tasks in the accounting industry, where predictability, consistency and accuracy really matter.

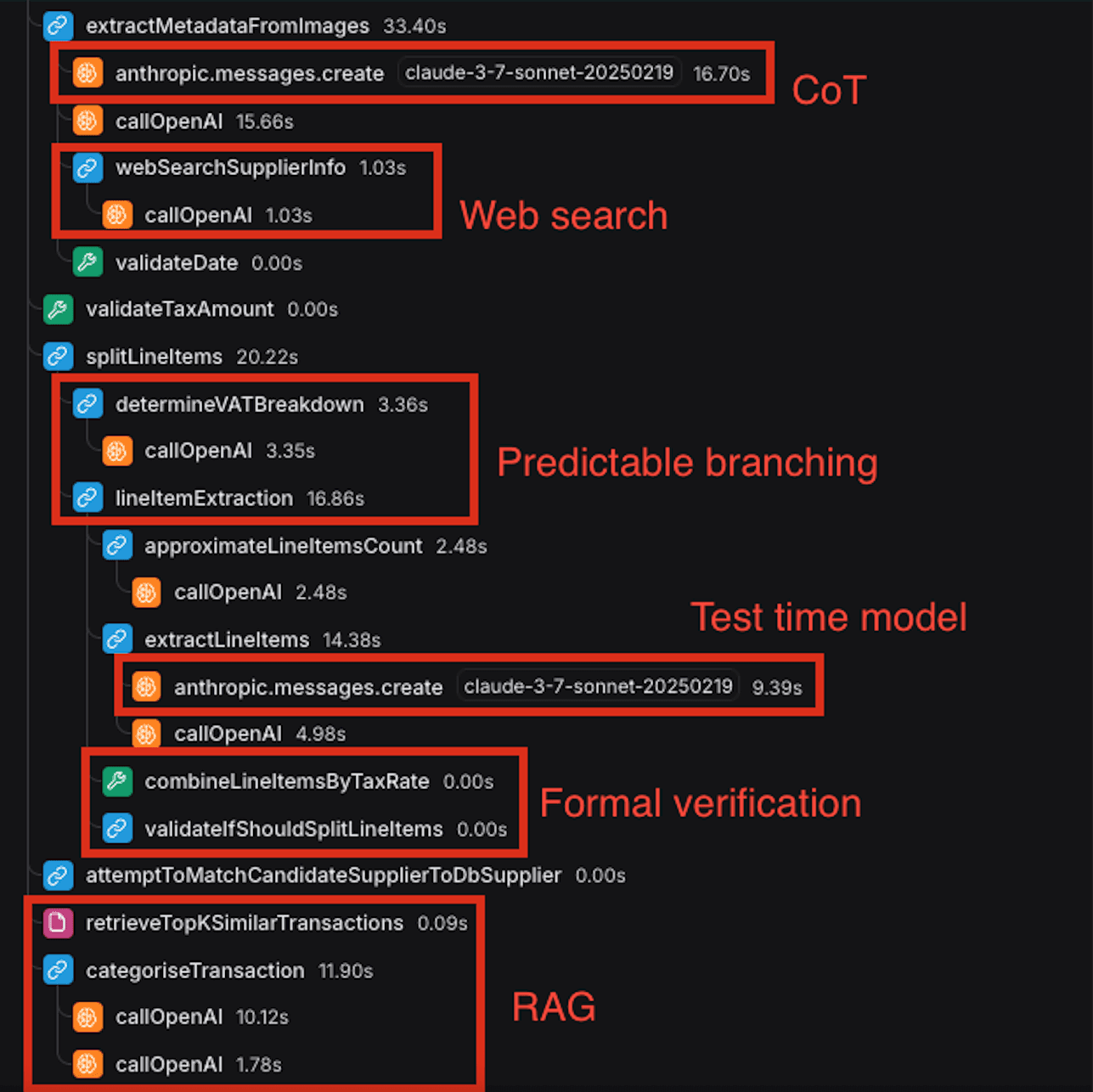

A common workflow for users of Briefcase is to upload their invoices into the system. Briefcase then performs a bookkeeping workflow on each invoice, which consists of multiple reasoning tasks such as extraction, line item split, categorisation and VAT rate assignment. Here's a snippet of our AI agent:

Let’s cover a few of these sections.

Chain of Thought

Chain of thought (CoT) is a very popular technique that has the LLM first reason about the task and only then perform it. This generally improves the quality of the output and reduces hallucinations.

However, it doesn’t come for free and has its own pros and cons:

Latency & Cost: CoT typically increases task latency by seconds or even tens of seconds, and cost increases proportionally

Consistency & Predictability: CoT significantly helps the LLM solve a task with a degree of predictability that is usually achieved with few-shot examples

Generalisation & Accuracy: We’ve seen CoT significantly improve the handling of out-of-distribution examples for which we haven’t optimised prompts or evals. However, we’ve also seen CoT degrade overall performance where tasks are very niche and prompt steps are too ambiguous

Faithfulness: While LLMs tend to ground their final answer in their reasoning, this is not always the case, so evals for a CoT task should include a faithfulness metric
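To make the faithfulness point concrete, here is a minimal sketch of one possible metric. It is a crude proxy, not the metric used at Briefcase: it assumes each CoT output is split into a free-text `reasoning` field and a final `answer` field (both hypothetical names), and counts an answer as faithful when the reasoning mentions it.

```python
from dataclasses import dataclass


@dataclass
class CotSample:
    reasoning: str  # free-text reasoning produced before the answer (assumed field)
    answer: str     # final answer, e.g. a category name (assumed field)


def is_faithful(sample: CotSample) -> bool:
    """Crude proxy: the final answer must be mentioned in the reasoning."""
    return sample.answer.strip().lower() in sample.reasoning.lower()


def faithfulness_rate(samples: list[CotSample]) -> float:
    """Share of samples whose answer is grounded in their own reasoning."""
    if not samples:
        return 0.0
    return sum(is_faithful(s) for s in samples) / len(samples)
```

A substring check like this misses paraphrases and can be fooled by reasoning that mentions several candidates, so in practice it would more likely serve as a cheap first filter ahead of a stricter check.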

When we apply CoT

Task is sufficiently difficult such that the LLM hallucinates

Input data to the LLM is highly unstructured

We have enough latency budget for the given task

Prompt structure

We found that this prompt structure works quite well for CoT prompts:

Less is more

Prompt hygiene naturally declines over time as we keep iterating, adding one sentence here and another there to cover new scenarios. The overall coherence of the prompt then decreases and the LLM may start to hallucinate more as a result.

Therefore the way we think about prompting is the same as for everything else in engineering: simplify, simplify, simplify.

The best prompt is no prompt

Try to reduce the prompt first before expanding it

Try to explain the problem you are solving as if to a five-year-old. If you can’t describe the task in simple enough terms and you leave ambiguity in, the LLM will inevitably hallucinate

Keep prompts and examples unambiguous; avoid words like could/may/might

Keep system prompts generic, and keep few-shot examples and user prompts specific to the individual customer or use case
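The last guideline can be sketched as follows. This is an illustrative layout rather than Briefcase's actual prompt: the prompt wording, the `Example` type and both helper functions are assumptions, and the messages use the common `role`/`content` chat format without calling any particular provider.

```python
from dataclasses import dataclass

# Generic system prompt: states the task, contains no customer-specific rules.
SYSTEM_PROMPT = (
    "You categorise invoice line items. "
    "Reply with exactly one category from the list in the user message."
)


@dataclass
class Example:
    description: str  # line item text from a previously booked invoice
    category: str     # category chosen for it by the customer's accountant


def render_user_prompt(categories: list[str], description: str) -> str:
    """Customer-specific part: their categories plus the item to categorise."""
    return f"Categories: {', '.join(categories)}\nLine item: {description}"


def build_messages(
    categories: list[str], examples: list[Example], description: str
) -> list[dict]:
    """Generic system prompt first, then customer few-shots, then the real query."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for ex in examples:
        messages.append(
            {"role": "user", "content": render_user_prompt(categories, ex.description)}
        )
        messages.append({"role": "assistant", "content": ex.category})
    messages.append(
        {"role": "user", "content": render_user_prompt(categories, description)}
    )
    return messages
```

Keeping everything customer-specific out of `SYSTEM_PROMPT` means a new customer or scenario changes only the examples and category list, so the shared prompt stays short.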

RAG

Vertical-AI tasks are highly contextual. To automate them, we have to inject task-specific context into each prompt. That’s particularly hard in fields like finance or law, because semantic similarity inside a domain differs from general-purpose similarity in everyday language. Most embedding models are trained for broad use and even vertical models are usually built on data that is still too generic to serve the needs of a single, specialised task.

Embedding space

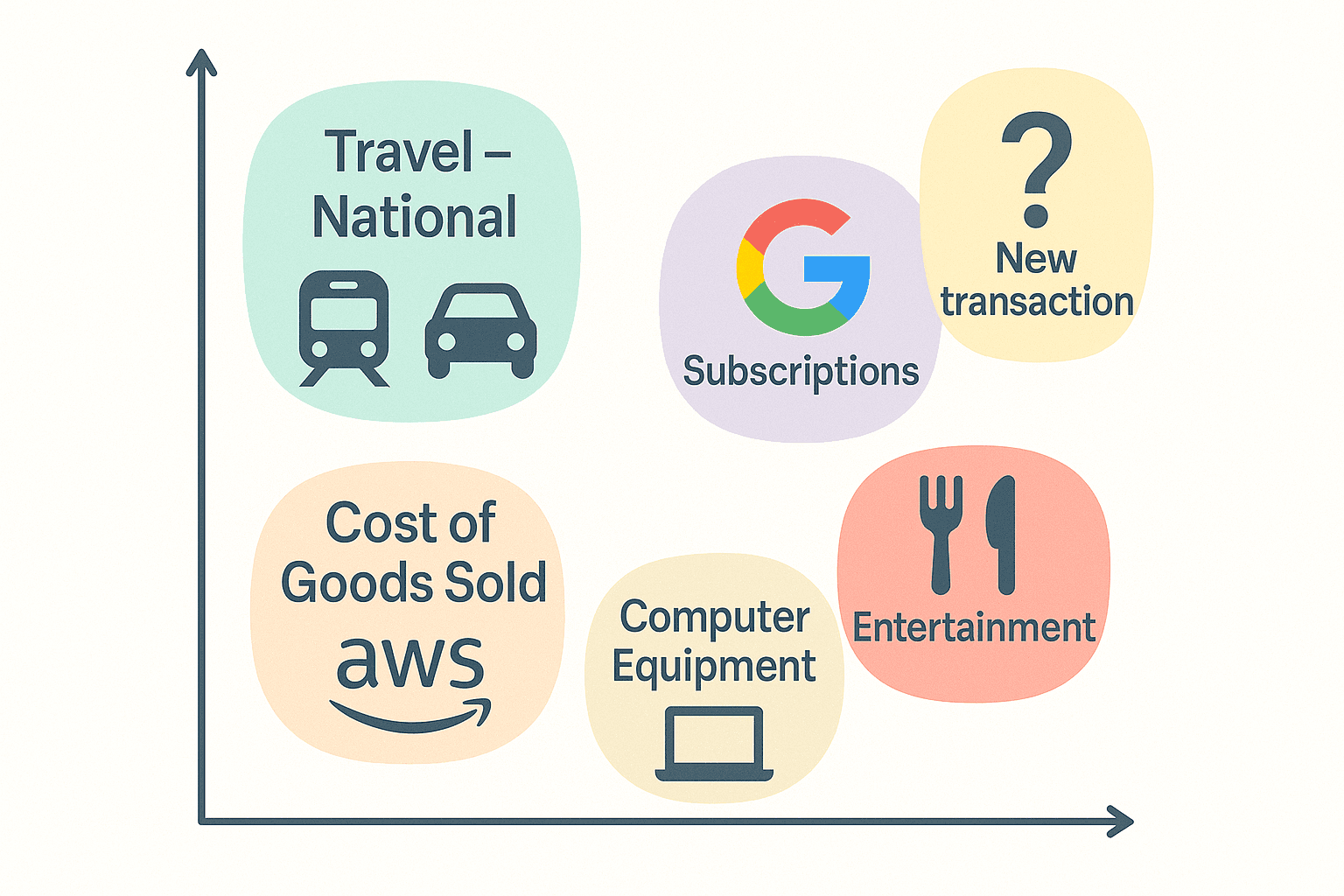

In accounting there is a task called transaction categorisation. The list of categories (or chart of accounts) is bespoke to each individual business and can also evolve and change over time. Here is an example categorisation that can apply to a B2B SaaS business:

The company’s purchase of Google Workspace is recorded under Subscriptions, whereas the AWS spend is categorised as Cost of Goods Sold. AWS costs rise with the traffic the SaaS product serves, while Gmail licences scale with headcount rather than incoming traffic.

Injecting the right context

For the LLM to categorise transactions with a high degree of consistency and accuracy, it needs examples of previously categorised transactions that are genuinely semantically similar. While a human accountant would be able to semantically distinguish between Google and AWS transactions, even the best available embedding models would struggle to tell them apart.

To address this we are applying a few techniques:

First, we spike the strengths and weaknesses of the best finance-aware embedding models and establish baseline thresholds for false positives and false negatives

Experiment with the definition of the embedded entity or chunk. We iteratively redefine what counts as a chunk, removing noisy attributes and adding steering metadata to maximise similarity with the right examples and minimise similarity with the wrong ones.

Recalibrate the thresholds with the aim of minimising false positives

On retrieval, only consider chunks that score above the recalibrated threshold
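The calibration and retrieval steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not Briefcase's implementation: similarity is plain cosine similarity, a "false positive" is a retrieved example that is not a genuine match, and the function names, the labelled `scored_pairs` input and the `max_false_positive_rate` parameter are all hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def calibrate_threshold(
    scored_pairs: list[tuple[float, bool]], max_false_positive_rate: float = 0.0
) -> float:
    """Pick the lowest threshold that keeps false positives within budget.

    scored_pairs holds (similarity, is_true_match) for labelled pairs.
    """
    negatives = sum(1 for _, is_match in scored_pairs if not is_match)
    for threshold in sorted({score for score, _ in scored_pairs}):
        false_positives = sum(
            1 for score, is_match in scored_pairs
            if score >= threshold and not is_match
        )
        if negatives == 0 or false_positives / negatives <= max_false_positive_rate:
            return threshold
    return float("inf")  # no threshold is safe, so retrieve nothing


def retrieve(
    query_vec: list[float],
    indexed_chunks: list[tuple[str, list[float]]],
    threshold: float,
    k: int = 5,
) -> list[str]:
    """Return up to k chunks whose similarity clears the threshold, best first."""
    scored = [(cosine(query_vec, vec), chunk) for chunk, vec in indexed_chunks]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in kept[:k]]
```

Choosing the lowest threshold that satisfies the budget keeps as many true matches as possible while still holding false positives down. When nothing clears the threshold, `retrieve` returns an empty list, so no misleading example reaches the prompt.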

Experimenting with chunks is similar to prompt engineering: it’s a lot of trial and error. And as with prompt engineering, we want to complete the task well for data outside the experimentation/evals dataset, meaning that we need to keep the context within chunks generic enough not to overfit.
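One way to make chunk definitions easy to iterate on is to treat the chunk as a function of the transaction plus a small configuration. The sketch below is illustrative only: the attribute names (`vendor`, `description`, `invoice_number`) and the `cost_driver` steering field are invented for the example and do not come from Briefcase's schema.

```python
from typing import Optional


def build_chunk(
    transaction: dict, include: tuple, steering: Optional[dict] = None
) -> str:
    """Render the text that gets embedded for one transaction.

    include  -- attributes kept in the chunk, in order; the rest is dropped as noise
    steering -- extra metadata meant to pull the chunk towards the right neighbours
    """
    fields = {key: transaction[key] for key in include if transaction.get(key)}
    fields.update(steering or {})
    return "; ".join(f"{key}: {value}" for key, value in fields.items())


# Two candidate chunk definitions for the same (hypothetical) transaction.
tx = {"vendor": "AWS", "description": "EC2 usage", "invoice_number": "INV-001"}
plain = build_chunk(tx, ("vendor", "description"))
steered = build_chunk(tx, ("vendor", "description"), {"cost_driver": "traffic"})
```

Each candidate definition would then be embedded and scored on the evaluation set. Restricting steering metadata to a few broad attributes is one way to keep chunks from overfitting to that set.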

Even though a few techniques let us get the most out of the available embedding models, for vertical AI applications these models often hit their limits. Therefore we will be developing in-house solutions that are optimised for the financial tasks that we are automating.

—

We will be covering other parts of our agents in future posts. If you’ve made it this far and this sounds interesting to you, we are hiring Founding Product Engineers. Please apply to jan@briefcase.so.
